Bot Architecture Part 2: Versions & Updates

This is the second installment in a multi-part series about the architecture and design of XenoBot. An introduction to the series and topic is here and the first post is here.

One peculiar aspect of bot development is the frequent out-of-cycle updates that will inevitably drive you insane. You see, when a video game updates, the memory offsets of important information move around. If you're lucky, the function prototypes, packet protocols, and data structures will remain the same; but they often don't. Either way, your bot needs to update as well.

Handling these updates is a major task, and maybe one day I'll write about it. Today, though, we're going to talk about what happens after each update, and how they're delivered to the users.

Multi Versioning

At least in XenoBot's case, there's an extra concern when dealing out updates. There are thousands of emulated servers for Tibia, and very few are actually up-to-date with the latest client. Instead, people spend months (or years!) perfecting their maps, quests, NPCs, monsters, and items in order to release them on a popular version of the game protocol, where they will remain for their lifetime.

Many of these emulated servers last for years, and a good portion of the Tibia population plays them. Capturing that market is essential for profit, but it brings with it an inescapable issue: providing access to older versions of the bot.

For most bots, this just means having dozens of installers linked on a download page and expecting the user to manage the multiple installs. In XenoBot, I went a different route and added multi versioning, which is neatly integrated into the auto updater.

Automatic Updates

Another big issue with game updates is the hailstorm of forum spam: "y bot no work", "where is xeno", "my bot will not inject!", and so on. Rather than signing off each update with a flurry of "please install the latest version" replies (you'd think people would get used to it, but they don't), I decided to make the bot automatically ensure it's always got the most up-to-date information for all existing versions of the bot.

Implementation

As I've already mentioned, there are easy updates (memory offsets only) and hard updates (changes to code, structures, or other important information). Rather than push a new version of the core bot library for the former, I decided I could just push the new memory offsets in a separate file, herein referred to as an .xblua file. For the more complex updates, though, I would need to push the core bot library, herein a .dll file. Every .dll file is accompanied by three more files: spells.xml, items.xml, and library.lua. This set of four files is herein the core package.

In essence, this led to a many-to-one relationship between the .xblua and .dll files (keyed on a field called offset version), and a one-to-one relationship between the .dll and the remaining files (keyed on a field called bot version).
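To make those relationships concrete, here's a minimal C++ sketch of how they could be modeled. The type and member names (OffsetSet, CorePackage, Catalog) are hypothetical rather than XenoBot's actual code, and the sketch assumes each offset version has exactly one current core package:

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>
#include <vector>

// Hypothetical model of the file relationships; names are illustrative.
// An .xblua file: memory offsets for exactly one client build.
struct OffsetSet {
    std::string offsetVersion;  // shared key, e.g. "0028"
    std::string md5;            // identifies this particular offset set
};

// A core package: the .dll plus spells.xml, items.xml and library.lua,
// all tagged with the same offset version and bot version.
struct CorePackage {
    std::string offsetVersion;
    std::string botVersion;     // e.g. "17.05.31.1291"
};

// Many OffsetSets resolve to one CorePackage through offsetVersion.
class Catalog {
public:
    // Pushing a new core package for an offset version replaces the old one.
    void addPackage(const CorePackage& p) { packages_[p.offsetVersion] = p; }
    void addOffsets(const OffsetSet& o) { offsets_.push_back(o); }

    // The core package an offset set should be loaded with, if any.
    const CorePackage* packageFor(const OffsetSet& o) const {
        auto it = packages_.find(o.offsetVersion);
        return it == packages_.end() ? nullptr : &it->second;
    }

    // Number of offset sets that share one offset version's core package.
    std::size_t offsetsSharing(const std::string& offsetVersion) const {
        std::size_t n = 0;
        for (const auto& o : offsets_)
            if (o.offsetVersion == offsetVersion) ++n;
        return n;
    }

private:
    std::map<std::string, CorePackage> packages_;
    std::vector<OffsetSet> offsets_;
};
```

With this shape, an offsets-only update is just another addOffsets call, while a new core package for an offset version reaches every offset set that points at it.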

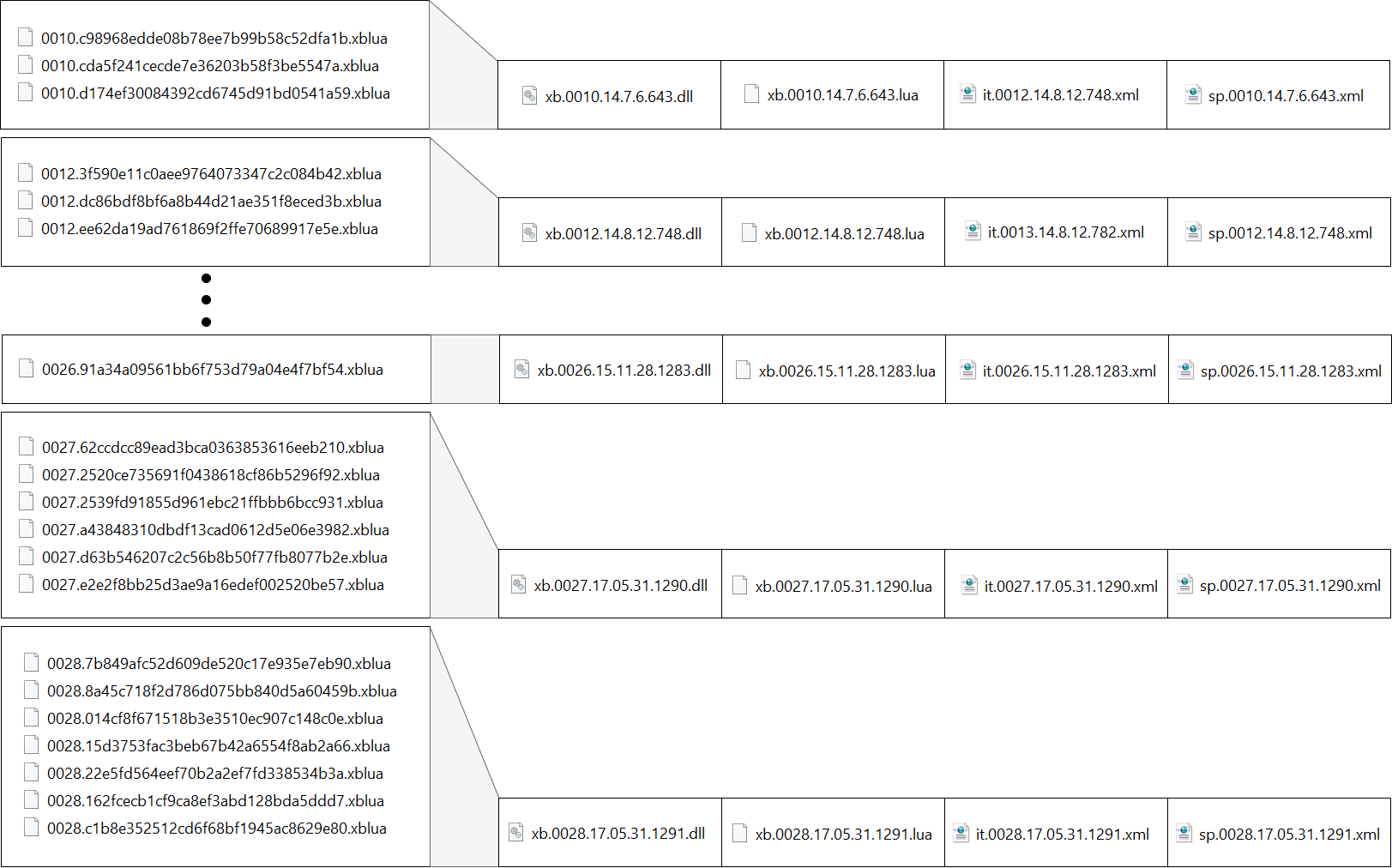

In the diagram above, the .xblua files are named <offset version>.<file md5> and the other files are named <type>.<offset version>.<bot version>.
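Since both keys are embedded in the file names, they can be recovered with a little string splitting. This is an illustrative sketch that assumes the naming scheme is followed exactly; the function and struct names are made up for the example. Note that the bot version itself contains dots, so everything between the offset version and the extension is treated as the bot version:

```cpp
#include <cassert>
#include <optional>
#include <string>

// Hypothetical result types for the two naming schemes.
struct CoreFileName {
    std::string type;           // "xb", "it" or "sp"
    std::string offsetVersion;  // e.g. "0028"
    std::string botVersion;     // e.g. "17.05.31.1291" (contains dots)
    std::string extension;      // "dll", "lua" or "xml"
};

struct OffsetFileName {
    std::string offsetVersion;
    std::string md5;
};

// "<type>.<offset version>.<bot version>.<ext>"
std::optional<CoreFileName> parseCoreFile(const std::string& name) {
    auto first = name.find('.');
    auto extDot = name.rfind('.');
    if (first == std::string::npos || extDot == first) return std::nullopt;
    auto second = name.find('.', first + 1);
    // Require a non-empty bot version between the second dot and the extension.
    if (second == std::string::npos || second + 1 >= extDot) return std::nullopt;
    return CoreFileName{
        name.substr(0, first),
        name.substr(first + 1, second - first - 1),
        name.substr(second + 1, extDot - second - 1),
        name.substr(extDot + 1)};
}

// "<offset version>.<file md5>.xblua"
std::optional<OffsetFileName> parseOffsetFile(const std::string& name) {
    const std::string suffix = ".xblua";
    if (name.size() <= suffix.size() ||
        name.compare(name.size() - suffix.size(), suffix.size(), suffix) != 0)
        return std::nullopt;
    std::string stem = name.substr(0, name.size() - suffix.size());
    auto dot = stem.find('.');
    if (dot == std::string::npos) return std::nullopt;
    return OffsetFileName{stem.substr(0, dot), stem.substr(dot + 1)};
}
```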

This set of relationships proved to be really powerful. When only offsets were updated, I could push a new .xblua file without changing anything else. This saved time, bandwidth, and storage for me, my servers, and my customers. Additionally, it meant that any new features or bug fixes could be delivered as a new core package, effectively applying them to every client version that shares that package's offset version.

This was immensely helpful, as the most serious (and most populated) emulated servers would do their best to keep up with the current client, typically staying only one or two versions behind. Being able to keep pushing new features and bug fixes to these servers, even on slightly older clients, meant more happy customers.

Of course, in the case of a more complex update, the core package would require an update, which meant severing the tie by introducing a new offset version. This was never ideal, but at least the system allowed multiple offset versions to co-exist.

FWIW, there are currently 62 sets of offsets across only 17 offset versions.

```
Nick@neutrino $ ls *.xblua | wc -l
62
Nick@neutrino $ ls *.dll | wc -l
17
```

XenoSuite

This is all facilitated by an application called XenoSuite. Essentially, XenoSuite has the job of waiting for a Tibia client to open, detecting its running version, locating the proper core package, and injecting the core library into the client.

It's able to do this because each .xblua file contains two important fields: versionMagic and versionAddress. Inside of Tibia's code, there is a function which crafts a network packet telling the game server the version of the client. It looks something like this:

```
PUSH <imm16 clientVersion>
CALL Tibia.pushPacketInteger
```

versionAddress is the memory offset of the PUSH instruction, and versionMagic is a 4-byte representation of the data at that offset. Since each Tibia client has a unique version, reading the memory at versionAddress and comparing it to versionMagic is a quick and reliable way of determining the client version. In other words, for each .xblua file, XenoSuite reads memory from the client at versionAddress and checks whether it matches versionMagic. If it does, XenoSuite has found the corresponding .xblua file, and it finds the proper core package by matching offset versions. Then it injects the core .dll file and uses IPC to tell the injected code which .xblua file to load.
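The detection loop might look roughly like the sketch below. It assumes versionAddress and versionMagic both fit in 32-bit integers and hides the actual memory access behind a hypothetical ReadU32 callback (on Windows, something like ReadProcessMemory would sit behind it). Injection and the IPC handoff are left out:

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <map>
#include <optional>
#include <string>
#include <vector>

// The two fields each .xblua file carries (32-bit types are an assumption).
struct XbluaInfo {
    std::string fileName;
    std::string offsetVersion;
    std::uint32_t versionAddress;  // address of the PUSH instruction
    std::uint32_t versionMagic;    // expected 4 bytes at that address
};

// Hypothetical reader for the target process; returns false if the read fails.
using ReadU32 = std::function<bool(std::uint32_t address, std::uint32_t& out)>;

struct Match {
    const XbluaInfo* xblua;  // offsets to hand to the injected code
    std::string dllName;     // core library to inject
};

// Try each .xblua file until the client's bytes match its versionMagic,
// then pick the core package with the same offset version.
std::optional<Match> detectClient(
        const std::vector<XbluaInfo>& xbluas,
        const std::map<std::string, std::string>& dllByOffsetVersion,
        const ReadU32& read) {
    for (const auto& x : xbluas) {
        std::uint32_t bytes = 0;
        if (!read(x.versionAddress, bytes) || bytes != x.versionMagic)
            continue;  // unreadable or different client build
        auto it = dllByOffsetVersion.find(x.offsetVersion);
        if (it == dllByOffsetVersion.end())
            return std::nullopt;  // offsets match, but no core package on disk
        return Match{&x, it->second};
    }
    return std::nullopt;  // unknown client version
}
```

Keeping the memory read behind a callback means the matching logic can be exercised against a fake memory map without a running client.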

This can all happen automagically, often across updates without the user even noticing, because XenoSuite periodically checks to make sure it has all of the latest files. It does this by querying a web API which returns a text blob:

```
XBUPDATE
ADDR
0010.4d4e30e84ea66f1ff35fc7b9b8043e15.xblua|2834
<a bunch of files omitted>
0028.c1b8e352512cd6f68bf1945ac8629e80.xblua|3205
DLL
xb.0010.14.7.6.643.dll|1292624
<a bunch of files omitted>
xb.0028.17.05.31.1291.dll|1979384
LLIB
xb.0010.14.7.6.643.lua|106098
<a bunch of files omitted>
xb.0028.17.05.31.1291.lua|229242
IXML
it.0010.14.7.6.643.xml|106858
<a bunch of files omitted>
it.0028.17.05.31.1291.xml|114283
SXML
sp.0010.14.7.6.643.xml|15678
<a bunch of files omitted>
sp.0028.17.05.31.1291.xml|20072
XBDONE
```

It uses the start and end tags XBUPDATE and XBDONE to validate that the response is complete, and the ADDR, DLL, LLIB, IXML, and SXML tags to identify the type of the files that follow (.xblua offsets, .dll libraries, .lua libraries, items .xml, and spells .xml, respectively). Any line which isn't a tag is formatted as <file name>|<file size in bytes>.
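A parser for this format is short. The sketch below is one possible way to write it, not XenoSuite's actual code: it rejects responses missing either the XBUPDATE or XBDONE tag, tracks the current section tag, and splits every other line on the |. Skipping blank lines and tolerating Windows line endings are choices made for this example:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <optional>
#include <sstream>
#include <stdexcept>
#include <string>
#include <vector>

// One entry from the update listing.
struct RemoteFile {
    std::string section;  // "ADDR", "DLL", "LLIB", "IXML" or "SXML"
    std::string name;
    std::uint64_t size;   // expected size in bytes
};

// Returns std::nullopt unless the blob starts with XBUPDATE, ends with
// XBDONE, and every other line is a known tag or a "name|size" pair.
std::optional<std::vector<RemoteFile>> parseUpdateList(const std::string& blob) {
    std::istringstream in(blob);
    std::string line, section;
    std::vector<RemoteFile> files;
    bool started = false, done = false;
    while (std::getline(in, line)) {
        if (!line.empty() && line.back() == '\r') line.pop_back();
        if (line.empty()) continue;
        if (done) return std::nullopt;  // data after XBDONE
        if (!started) {
            if (line != "XBUPDATE") return std::nullopt;
            started = true;
        } else if (line == "XBDONE") {
            done = true;
        } else if (line == "ADDR" || line == "DLL" || line == "LLIB" ||
                   line == "IXML" || line == "SXML") {
            section = line;
        } else {
            auto bar = line.find('|');
            if (section.empty() || bar == std::string::npos || bar == 0)
                return std::nullopt;  // entry outside a section, or malformed
            std::string num = line.substr(bar + 1);
            try {
                std::size_t used = 0;
                std::uint64_t size = std::stoull(num, &used);
                if (used != num.size()) return std::nullopt;  // trailing junk
                files.push_back({section, line.substr(0, bar), size});
            } catch (const std::exception&) {
                return std::nullopt;  // empty or out-of-range size
            }
        }
    }
    if (!done) return std::nullopt;  // truncated response
    return files;
}
```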

Yeah, I know I'm not using JSON. No, it's not dumb. C++ parses this easier than JSON.

XenoSuite parses this response and builds a list of all of the files it should have. If it has any extra files, they are deleted. If it is missing any files, they are downloaded.
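That reconciliation step boils down to two set differences. In this hypothetical sketch, both the server listing and the local directory are represented as name-to-size maps. Treating a size mismatch as a missing file is an assumption made for this example about how the size field could be used, not something described above:

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <string>
#include <vector>

using FileSizes = std::map<std::string, std::uint64_t>;  // name -> bytes

struct SyncPlan {
    std::vector<std::string> toDelete;    // on disk, but not in the listing
    std::vector<std::string> toDownload;  // listed, but absent (or wrong size)
};

SyncPlan planSync(const FileSizes& listed, const FileSizes& local) {
    SyncPlan plan;
    for (const auto& entry : local)
        if (listed.count(entry.first) == 0)
            plan.toDelete.push_back(entry.first);
    for (const auto& entry : listed) {
        auto it = local.find(entry.first);
        // Assumption: a size mismatch means a stale or partial download.
        if (it == local.end() || it->second != entry.second)
            plan.toDownload.push_back(entry.first);
    }
    return plan;
}
```

Because the listing is treated as the single source of truth, retired versions disappear from disk automatically once the server stops listing them.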

Potential Improvements

For starters, there was no real reason to require a one-to-one relationship between the files in the core package. I did it this way to cut down on relational complexity, but it means there's tons of duplication.

Additionally, it is theoretically possible to add one more layer of abstraction between the .xblua files and the business logic of the .dll file. If all of the game interop were abstracted away into a separate layer, new features and bug fixes could be delivered to all client versions from a single codebase. Sadly, this is way harder than it sounds, especially when tacking it onto a massive project that wasn't exactly designed amazingly to start with; XenoBot was started when I was 15, after all, and the older code blatantly shows that. Even if I wanted to do this from scratch, there are some massive hurdles to overcome:

- It's very hard to make good abstractions when you don't fully understand the nature of what you're abstracting. The game client isn't an API; it's a blob of bytes that can change in any way at any time. What you choose to abstract today might not make sense in a year, and in the worst case it can break the entire system.

- Many bot features are designed around new game content, and having far-reaching backwards compatibility means either neutering new functionality to make sense for older versions or completely disabling it on older versions, which defeats the purpose.

- It's an absolute nightmare for testing. And since bots are already a nightmare for testing, it's nightmare inception. It's not fun.

All in all, I love this system. It's not as good as it can be, but it's better than what any of my competitors have, and it has worked flawlessly for going on four years. It's a really interesting niche case that would seem absurd in the context of most software, so I got the fun of designing it from the ground up and seeing it work, which is always a great feeling.